Scaling Vogent's Voice Agent Evaluation with Verdict

April 18, 2025

A few months ago, while aligning custom judges for clients, we noticed a common pattern: prompted judges were more popular than fine-tuned judges (trained with RL or other alignment methods), despite reaching a lower final human agreement score. Customers preferred the visibility and configurability that prompted judges provided. Moreover, they disliked hosting custom models and would rather hit a public model API, such as OpenAI's. However, off-the-shelf prompted LLM judges were far too unreliable to serve as an evaluation metric for their AI applications.

In response to this, we built out a toolbox of common features to robustify LLM-as-a-judge setups: graphs of judge calls with proper schemas, useful primitives & architectural patterns, output scales, batch evaluation, and extractor methods that echoed the lessons from the research literature.

We packaged this into a Python library called Verdict and used it internally for our client work. We have since released it to the open-source community. Below, we walk through how Verdict powers Vogent's voice agent development and evaluation platform.

Vogent is the all-in-one platform for building humanlike, intelligent, and effective voice agents. As a SaaS platform providing a complex end-to-end AI system, Vogent and their customers are exposed to AI risk: the potential downside from unreliable outputs, which can range from somewhat embarrassing to a PR crisis.

To give their customers insight into the performance of their voice agents, Vogent needed automated evaluation. Since each Vogent customer’s needs are different, there is no fixed set of criteria the team could use to fit a shared evaluator. To maximize flexibility, the team decided to let users specify in natural language the exact behavior they wanted to allow or penalize. These requirements led the team to believe that an LLM-as-a-judge, that is, prompting one LLM to score the output of another, was the perfect fit.

There was one problem: LLM-as-a-judge setups are difficult to calibrate and exhibit numerous biases that make adoption harder than it may initially seem.

Calibrating an LLM-as-a-judge re-introduces the difficult problem of prompt fiddling. You omit one edge-case and suddenly the scores no longer match your intended goal… or maybe you add a new case and suddenly the LLM forgets about the old cases. This can be extremely frustrating and take hours to tune and debug. After all this, you’re still not out of the woods. Since criteria are constantly shifting, you’ll need to pay special attention to how your scores evolve on various sets of customer data.

Moreover, like all LLMs, LLM judges exhibit a lot of variance in their scores, arbitrarily preferring a response on one call and then disliking it when asked again. As researchers, we know how frustrating it can be if your scorer is essentially just noise. To a less technical end-user, this noise is perceived as a flaky product. Furthermore, the Vogent team had been building out an RL-based self-improvement product and needed a stable reward signal.

Our requirements were clear — a flexible LLM-as-a-judge setup that had low variance and could be run efficiently on large datasets to confirm the user's intended effect was achieved.

What Have You Tried Before?

This is generally the first thing we ask a new client. Many have become frustrated with the pitfalls of naively calling out to an LLM-as-a-judge, such as randomness and instability, miscalibration with the nuances of their task, and unclear generalization ability.

Vogent had tried passing the call transcript to a single gpt-4o call via the OpenAI API to predict a PASS/FAIL score along with some chain-of-thought reasoning. Below, we show an example LLM-as-a-judge prompt, including a user-specified list of rules, or criteria.

Request Prompt

Evaluate the following transcript against the following rules.

- If offered to be placed on hold, accept

- Never give agent name, only attendee name

- Wait for them to finish giving the confirmation number

- If provided a new number to call, thank them and get the number

- Correct bad spelling

- Do not hang up during holds

- Never reject the confirmation number

- Board types are “room only” OR “bed and breakfast”.

- Make sure they look it up with the guests name

Respond with FAIL if any of these are disobeyed. Otherwise, respond with PASS. Provide your reasoning first in the following format.

<reasoning>Your reasoning for the following score</reasoning>

<score>PASS or FAIL; NO OTHER WORDS HERE</score>

Here is the call transcript:<TRANSCRIPT>

Response

<reasoning>The agent continues to make requests while being placed on hold, hence this transcript does not pass all the rules.</reasoning>

<score>FAIL</score>

The team had observed high-variance responses (PASS sometimes, FAIL sometimes) — behavior that would be confusing to an end-user. We applied a few Verdict built-ins to reduce the variance and better calibrate the LLM-as-a-judge to the user’s specified criteria.
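Before any aggregation can happen, each raw response in the tagged format shown above has to be turned into a structured score. A minimal sketch of that step follows; the `JudgeResult` type and `parse_judge_response` helper are hypothetical names of ours, not part of Vogent's code or Verdict's API.

```python
import re
from typing import NamedTuple


class JudgeResult(NamedTuple):
    reasoning: str
    passed: bool


def parse_judge_response(text: str) -> JudgeResult:
    """Extract the reasoning and PASS/FAIL score from a tagged judge response."""
    reasoning = re.search(r"<reasoning>(.*?)</reasoning>", text, re.DOTALL)
    score = re.search(r"<score>\s*(PASS|FAIL)\s*</score>", text)
    if score is None:
        # Surface malformed output instead of silently assigning a score.
        raise ValueError("no PASS/FAIL score found in judge response")
    return JudgeResult(
        reasoning=reasoning.group(1).strip() if reasoning else "",
        passed=score.group(1) == "PASS",
    )


response = (
    "<reasoning>The agent continues to make requests while being placed "
    "on hold.</reasoning>\n"
    "<score>FAIL</score>"
)
result = parse_judge_response(response)
print(result.passed, "|", result.reasoning)
```

Raising on a missing score keeps formatting failures visible, so they are not mistaken for genuine FAIL judgements when scores are aggregated later.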

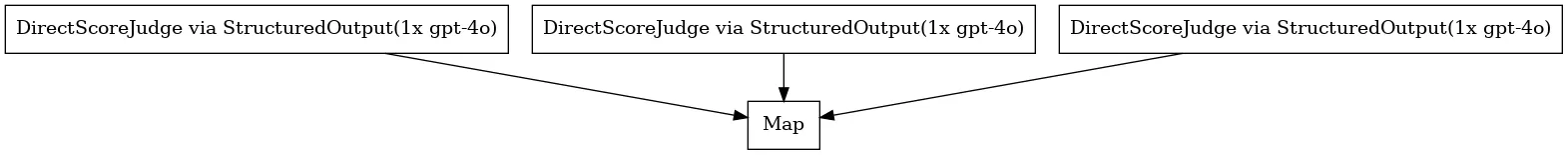

Ensemble of Judges

LLMs generate sequences by sampling token by token. This introduces some randomness in each rollout, despite using the same prompt. We can smooth out this variance by rolling out a few judge attempts and considering the most common score as our final judgement — this is called max-voting or self-consistency.

With Verdict, we can simply wrap our LLM-as-a-judge call inside a Layer to repeat the call as many times as we like, and pass the result to a MaxPoolUnit to execute the max-vote operation. Verdict automatically runs each call in parallel, so this takes the same amount of wall time as a single call — we’ll touch more on this unique feature of our execution engine below.
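To make the max-vote idea concrete without depending on any particular library, here is a plain-Python sketch using only the standard library. It is not Verdict's API: `max_vote` and `scripted_judge` are hypothetical helpers, and the scripted judge stands in for a real LLM call so the example runs offline.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable


def max_vote(judge: Callable[[str], str], transcript: str, repeat: int = 5) -> str:
    """Call `judge` `repeat` times concurrently and return the most common score."""
    with ThreadPoolExecutor(max_workers=repeat) as pool:
        votes = list(pool.map(judge, [transcript] * repeat))
    return Counter(votes).most_common(1)[0][0]


def scripted_judge(votes: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a noisy LLM judge: replays a fixed list of votes."""
    it = iter(votes)
    lock = threading.Lock()

    def judge(_transcript: str) -> str:
        with lock:  # the iterator is shared across worker threads
            return next(it)

    return judge


# Three of the five simulated rollouts say FAIL, so the ensemble says FAIL.
noisy = scripted_judge(["FAIL", "PASS", "FAIL", "FAIL", "PASS"])
final_score = max_vote(noisy, "<TRANSCRIPT>", repeat=5)
print(final_score)
```

Using an odd number of repeats with a binary PASS/FAIL scale avoids ties in the vote.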


A wide body of research literature has also shown that we can drop to a weaker (and hence cheaper) model for the evaluation. For example, we can switch over to gpt-4o-mini, whose output tokens are 16x cheaper. Our ensemble above achieves a lower-variance and likely more aligned score distribution at 30% of the cost while running over 50% faster.

Rubric Fan-Out

Vogent’s flexible evaluation product allows customers to specify the criteria for their ideal response as bullet points. Commonly, LLMs will neglect or deprioritize bullet points at random when generating their final judgement. This points to another large source of randomness. How can we ensure that each criterion is attended to and given complete consideration?

Verdict allows you to create arbitrary graphs of LLM calls for evaluation. This means you can stitch together a chain of decision questions that is bespoke to your evaluation task. The templating engine even allows you to do this dynamically. We’ll take a page from the research literature and implement Fine-Grained LLM Unit Tests to evaluate each rubric item independently and combine them at the end.
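The fan-out described above can be sketched in plain Python: one judge call per rubric item, run concurrently, with the overall score failing if any single rule is disobeyed. This is an illustration under our own assumptions rather than Verdict's API; `rubric_fan_out`, `overall_score`, and the keyword-based `stub_judge` (which replaces a real LLM call) are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# (rule, transcript) -> True if the rule was obeyed
RuleJudge = Callable[[str, str], bool]


def rubric_fan_out(rules: List[str], transcript: str, judge_rule: RuleJudge) -> Dict[str, bool]:
    """Judge each rubric item independently so no rule can be silently dropped."""
    with ThreadPoolExecutor(max_workers=max(1, len(rules))) as pool:
        verdicts = list(pool.map(lambda rule: judge_rule(rule, transcript), rules))
    return dict(zip(rules, verdicts))


def overall_score(per_rule: Dict[str, bool]) -> str:
    """Mirror the original prompt: FAIL if any rule is disobeyed, else PASS."""
    return "PASS" if all(per_rule.values()) else "FAIL"


def stub_judge(rule: str, transcript: str) -> bool:
    # Hypothetical stand-in for one LLM call per rule.
    if rule == "Do not hang up during holds":
        return "[agent hangs up]" not in transcript
    return True


rules = [
    "If offered to be placed on hold, accept",
    "Do not hang up during holds",
    "Never reject the confirmation number",
]
transcript = "Rep: Can I place you on hold?\nAgent: Sure.\n[agent hangs up]"
per_rule = rubric_fan_out(rules, transcript, stub_judge)
print(overall_score(per_rule), per_rule)
```

A side benefit of this structure is that the per-rule dictionary tells the user exactly which criterion caused a FAIL.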


The Vogent team saw a 38% increase in ground-truth human agreement using this rubric fan-out. Moreover, each rubric item was explicitly attended to by the LLM-as-a-judge, unlike before, when criteria were dropped arbitrarily. By applying known inductive biases to your problem space, you can increase the attentiveness of an LLM to the specific intricacies of your task. As the subject-matter experts, Vogent’s customers are the perfect people to supply Vogent with this information via their platform. In practice, we at Haize Labs work closely with our clients to extract this domain expertise and tune a custom judge that performs far better than a baseline LLM-as-a-judge.

Experimenting with Counterfactuals Efficiently

Customer needs are never static. In the sphere of automated evaluation, this manifests as criteria drift: the criteria we use to judge our AI system’s performance change as the business requirements evolve. The Vogent team has to tackle this problem twice: once for themselves, and once more for their own customers.

They wanted to provide their customers a toolkit to gain visibility into how changes in their criteria prompt would impact performance on previously seen AI transcripts before rolling it out into production. This would require efficiently running an LLM-as-a-judge on hundreds to thousands of historical samples. Since Verdict’s execution engine is designed from the ground up to consider each LLM call as a parallelizable unit, it would be a perfect fit to power a counterfactual experimentation dashboard.

We can construct a dataset from a pandas DataFrame and execute a Verdict pipeline over it. Verdict will run each sample and every LLM call within that sample in parallel. As an example, the ensemble pipeline from earlier will take roughly the same time to run as a single gpt-4o-mini call, regardless of how many samples we run on. This gives Vogent customers a clear picture of how changes in their criteria prompt will impact the pass/fail rate of historical transcripts.
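As a rough illustration of the counterfactual comparison, the sketch below flattens every (transcript, rule) pair into one pool of concurrent judge calls and compares the pass rate under the current and a proposed rule set. It uses a plain list where a real pipeline would read from a pandas DataFrame, and none of it is Verdict's API: `pass_rate`, `FORBIDDEN`, and `stub_judge` are hypothetical, with the stub replacing real LLM calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

RuleJudge = Callable[[str, str], bool]  # (rule, transcript) -> rule obeyed?


def pass_rate(transcripts: List[str], rules: List[str],
              judge_rule: RuleJudge, max_workers: int = 32) -> float:
    """Fraction of transcripts that obey every rule; all judge calls share one pool."""
    if not transcripts:
        return 0.0
    tasks = [(i, rule) for i in range(len(transcripts)) for rule in rules]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        obeyed = list(pool.map(lambda t: judge_rule(t[1], transcripts[t[0]]), tasks))
    passed = [True] * len(transcripts)
    for (i, _rule), ok in zip(tasks, obeyed):
        passed[i] = passed[i] and ok
    return sum(passed) / len(transcripts)


# Hypothetical stub: each rule is "violated" when a marker phrase appears.
FORBIDDEN = {
    "Do not hang up during holds": "[hangs up on hold]",
    "Never give agent name, only attendee name": "my name is",
}


def stub_judge(rule: str, transcript: str) -> bool:
    return FORBIDDEN[rule] not in transcript.lower()


history = [
    "Agent: Sure, I can hold.",
    "Agent: Hi, my name is Sam, calling for guest Lee.",
    "Agent: [hangs up on hold]",
    "Agent: Calling to confirm the booking for guest Lee.",
]
current_rules = ["Do not hang up during holds"]
proposed_rules = current_rules + ["Never give agent name, only attendee name"]

before = pass_rate(history, current_rules, stub_judge)
after = pass_rate(history, proposed_rules, stub_judge)
print(f"pass rate: {before:.2f} -> {after:.2f}")
```

Comparing the two rates on the same historical transcripts shows how strict a proposed rule would be before it is rolled out.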


Verdict’s engine handles a ton of issues that lurk in the small details of parallel execution of LLM calls, such as automatic retries and rate-limiting. Uniquely, we handle rate-limiting on the client side to avoid cascading failures in your judge calls. This is particularly useful for expensive provider models or custom vLLM judges that are hosted on your own infrastructure.
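To show what client-side rate-limiting with retries can look like in general, here is a small standard-library sketch. It is not how Verdict implements it; `RateLimiter`, `call_with_retries`, and the flaky stub are hypothetical, and the evenly spaced slot scheme is just one simple choice among several (token buckets being another).

```python
import threading
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimiter:
    """Space calls at least 1/rate seconds apart, shared safely across threads."""

    def __init__(self, rate: float) -> None:
        self._interval = 1.0 / rate
        self._lock = threading.Lock()
        self._next_slot = time.monotonic()

    def acquire(self) -> None:
        with self._lock:
            now = time.monotonic()
            slot = max(self._next_slot, now)  # reserve the next free slot
            self._next_slot = slot + self._interval
        delay = slot - now
        if delay > 0:
            time.sleep(delay)  # wait outside the lock so others can reserve


def call_with_retries(fn: Callable[[], T], limiter: RateLimiter,
                      retries: int = 3, base_delay: float = 0.01) -> T:
    """Run `fn` under the limiter, retrying failures with exponential backoff."""
    for attempt in range(retries + 1):
        limiter.acquire()  # retries also count against the rate limit
        try:
            return fn()
        except Exception:  # a real client would catch provider-specific errors
            if attempt == retries:
                raise
            time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError("unreachable")


attempts = {"n": 0}


def flaky_judge() -> str:
    # Hypothetical stand-in for a judge call that fails twice, then succeeds.
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("simulated transient failure")
    return "PASS"


result = call_with_retries(flaky_judge, RateLimiter(rate=200))
print(result, "after", attempts["n"], "attempts")
```

Throttling before the request is sent means a burst of parallel judge calls queues up locally instead of triggering a wave of provider-side rejections and retries.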


Conclusion

LLM-as-a-judge promises to be a cure-all for all your automated evaluation needs, but as we’ve seen in this walkthrough — the devil is in the details. Vogent was able to leverage Verdict to build sophisticated and robust LLM judge systems that actually solve their pain points and tune their evaluation platform to their customers’ needs.

If you’re interested in getting your AI apps out of POC purgatory and into production, find us at contact@haizelabs.com.

— the Haize Labs Team

New York City, 2025